How to Evaluate AI Use Cases That Actually Move the Needle

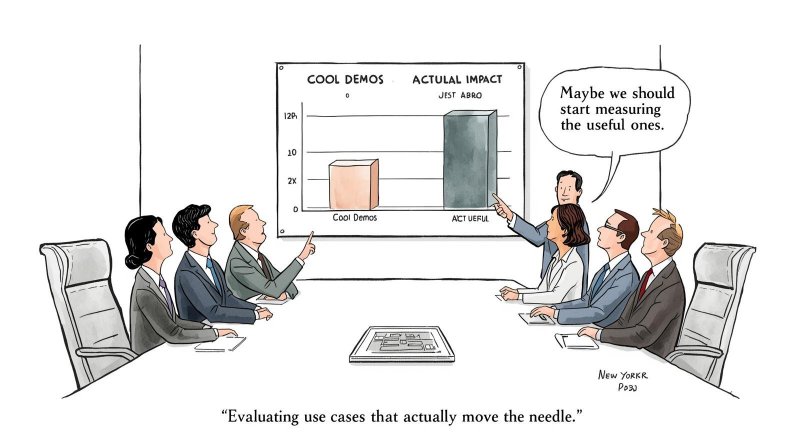

Companies are experimenting with AI everywhere. They’re adding chatbots, automating emails, building “smart dashboards,” or sprinkling generative features into existing products. And yet, very few of these projects actually move the needle.

The problem isn’t AI itself. It’s the way organizations choose which problems to solve. Most teams chase shiny demos, not business impact. The result? Projects that look impressive on launch day but fade quickly, generating little value and almost no learning.

This post provides a practical framework to evaluate AI use cases so your team invests in experiments that deliver measurable outcomes — and build lasting capabilities in the process.

Why Most AI Projects Fail

Before diving into the framework, it’s worth understanding why so many AI initiatives stall:

- Random experimentation: Teams pick “cool” features rather than high-value problems.

- Lack of metrics: Success is measured by completion or feature delivery, not by outcomes.

- Overlooking dependencies: Data quality, infrastructure, and operational requirements aren’t considered upfront.

- Short-term thinking: Quick demos look impressive but rarely compound into reusable capabilities.

Take a SaaS product that adds AI-generated chart summaries. Users might admire the feature, but adoption stays low, and the team learns very little it can apply elsewhere. That’s a feature, not a capability, and it’s the core distinction I explored in “The AI Strategy Gap”: adding AI features isn’t the same as building AI capabilities.

A Practical Framework for Evaluating AI Use Cases

Here’s a six-step framework to help prioritize AI projects that actually deliver impact:

Define the Strategic Objective. Start by tying every AI use case to a specific business outcome. Ask: “If we succeed, what changes for the business?”

- Examples: reducing churn, increasing operational efficiency, or speeding up decision-making.

- Without a clear outcome, even technically impressive projects can fail to deliver value.

Assess Feasibility. Not all AI projects are technically or operationally realistic. Consider:

- Data readiness: Do you have the right data, at the right quality and scale?

- Technical complexity: Can the team build, test, and deploy this in a reasonable timeframe?

- Operational fit: Can the AI output be integrated into existing workflows without friction?

Assessing feasibility up front helps you avoid projects that look good on paper but can’t be executed reliably.
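One way to turn these three questions into a single number is sketched below. It assumes each dimension is rated from 1 (poor) to 5 (strong) and makes a hypothetical design choice: overall feasibility is the weakest of the three ratings, since excellent data cannot rescue an output nobody can fit into a workflow. The `FeasibilityRatings` class and its field names are illustrative, not part of any established framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeasibilityRatings:
    """Hypothetical 1-5 ratings for the three feasibility questions (5 = best)."""

    data_readiness: int        # right data, at the right quality and scale?
    technical_simplicity: int  # 5 = easy to build, test, and deploy in time
    operational_fit: int       # 5 = output slots into existing workflows

    def score(self) -> int:
        """Return overall feasibility as the weakest of the three ratings."""
        values = (self.data_readiness, self.technical_simplicity, self.operational_fit)
        for value in values:
            if not 1 <= value <= 5:
                raise ValueError(f"rating {value} is outside the 1-5 scale")
        # Assumption: one weak dimension is enough to block reliable delivery,
        # so the minimum is used instead of an average.
        return min(values)


# Example: strong tooling and workflow fit, but the data is not ready yet.
ratings = FeasibilityRatings(data_readiness=2, technical_simplicity=5, operational_fit=4)
print(ratings.score())  # 2
```

Swapping `min` for an average would let a strong dimension offset a weak one; which rule fits depends on how your team wants to treat blockers.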

Estimate Potential Impact. Impact is more than a gut feeling. Quantify it wherever possible:

- Direct metrics: revenue, cost savings, or time saved.

- Indirect metrics: enabling new workflows, improving customer experience, or informing future decisions.

Think beyond immediate wins. AI use cases that generate repeatable value are often more impactful than those with flashy outputs.
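For direct metrics such as time saved, a back-of-the-envelope conversion into an annual figure is often enough to compare candidates. The sketch below assumes you can estimate minutes saved per task, task volume per week, and a loaded hourly cost; the function name, the example numbers, and the 48-working-week default are all hypothetical.

```python
def annual_time_savings(
    minutes_saved_per_task: float,
    tasks_per_week: float,
    hourly_cost: float,
    working_weeks_per_year: int = 48,
) -> float:
    """Estimate the yearly value of time saved by an AI use case.

    Every input is an estimate; replace the defaults with your own figures.
    """
    if min(minutes_saved_per_task, tasks_per_week, hourly_cost) < 0:
        raise ValueError("inputs must be non-negative")
    # Total minutes saved per year, converted to hours.
    hours_saved = minutes_saved_per_task * tasks_per_week * working_weeks_per_year / 60
    return hours_saved * hourly_cost


# Hypothetical: 6 minutes saved on each of 500 support tickets a week at $40/hour.
value = annual_time_savings(minutes_saved_per_task=6, tasks_per_week=500, hourly_cost=40)
print(f"${value:,.0f} per year")  # $96,000 per year
```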

Evaluate Risk. Every AI project carries risk. A practical evaluation considers:

- Model risk: errors, bias, or unpredictable behavior.

- Dependency risk: reliance on external APIs or third-party models.

- Operational risk: maintenance, monitoring, and human-in-the-loop needs.

A high-impact project that’s too risky or costly to operate may be less valuable than a slightly smaller, more reliable opportunity.
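The trade-off in that last sentence can be made explicit by dividing impact by a risk rating. This sketch assumes impact and each risk type are rated 1 to 5 (5 = highest impact or highest risk) and averages the three risk types; both the averaging rule and the example ratings are hypothetical choices, not a standard method.

```python
def risk_adjusted_impact(
    impact: float,
    model_risk: float,
    dependency_risk: float,
    operational_risk: float,
) -> float:
    """Divide impact by the average of the three risk ratings (all on 1-5)."""
    risk = (model_risk + dependency_risk + operational_risk) / 3
    if risk <= 0:
        raise ValueError("risk ratings must be positive")
    return impact / risk


# Hypothetical ratings: a bigger but fragile bet vs. a smaller, reliable one.
big_bet = risk_adjusted_impact(impact=5, model_risk=5, dependency_risk=4, operational_risk=5)
steady = risk_adjusted_impact(impact=4, model_risk=2, dependency_risk=1, operational_risk=2)
print(f"big bet: {big_bet:.2f}, steady: {steady:.2f}")  # big bet: 1.07, steady: 2.40
```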

Check for Compounding Value. Ask yourself: “Will this use case generate reusable insights or capabilities?”

- Does it help build internal AI know-how?

- Can the data, models, or feedback loops be applied to future projects?

Projects that compound learning build AI capabilities — which ultimately matter more than isolated features.

Score & Prioritize. Finally, use a simple scoring approach:

(Impact × Strategic Fit × Feasibility) ÷ Risk

Rate each factor on the same scale (for example, 1 to 5), with a higher risk rating meaning a riskier project. Then rank candidates by score and focus on a few high-leverage projects rather than chasing every AI trend. This keeps resources on initiatives that deliver measurable outcomes and long-term advantage.
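A minimal sketch of the scoring step follows, assuming every factor is rated on a 1 to 5 scale where a higher risk rating means a riskier project. The `Candidate` type, the `shortlist` helper, and the backlog entries are hypothetical; only the formula itself comes from the framework.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    """A candidate AI use case rated 1-5 on each factor (illustrative)."""

    name: str
    impact: float         # higher = bigger expected business outcome
    strategic_fit: float  # higher = tighter link to a strategic objective
    feasibility: float    # higher = easier to deliver reliably
    risk: float           # higher = riskier; must be positive


def priority_score(c: Candidate) -> float:
    """(Impact x Strategic Fit x Feasibility) / Risk."""
    if c.risk <= 0:
        raise ValueError(f"{c.name}: risk must be positive")
    return c.impact * c.strategic_fit * c.feasibility / c.risk


def shortlist(candidates: list[Candidate], top_n: int = 3) -> list[tuple[str, float]]:
    """Rank candidates by score, highest first, and keep only the top few."""
    ranked = sorted(candidates, key=priority_score, reverse=True)
    return [(c.name, priority_score(c)) for c in ranked[:top_n]]


# Hypothetical backlog; names and ratings are made up for illustration.
backlog = [
    Candidate("Support-ticket triage", impact=4, strategic_fit=4, feasibility=4, risk=2),
    Candidate("Marketing copy generator", impact=2, strategic_fit=2, feasibility=5, risk=1),
    Candidate("Demand forecasting", impact=5, strategic_fit=4, feasibility=3, risk=2),
    Candidate("Meeting-notes bot", impact=1, strategic_fit=1, feasibility=5, risk=1),
]
for name, score in shortlist(backlog, top_n=2):
    print(f"{name}: {score:.1f}")
# Support-ticket triage: 32.0
# Demand forecasting: 30.0
```

Because the factors are multiplied, a very low rating on any one of them drags the whole score down: an easy-to-build idea with little impact or strategic fit stays near the bottom of the list.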

Example in Action

Imagine a SaaS analytics platform considering two AI projects:

- Project 1: Auto-generating chart summaries

  - Easy to implement and impressive in demos, but adoption is low.

  - Minimal impact on retention or revenue.

- Project 2: Predicting churn from usage patterns

  - Moderate complexity; requires historical data and feature engineering.

  - High strategic impact: reduces churn and builds predictive capability for other products.

Using the framework, the churn-prediction project clearly scores higher. It not only moves the needle but also builds AI capabilities the company can reuse across workflows.
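To make the comparison concrete, here is the scoring formula applied to both projects. The 1 to 5 ratings are hypothetical, chosen only to reflect the descriptions above: chart summaries get Impact 1, Strategic Fit 2, Feasibility 5, Risk 2; churn prediction gets Impact 5, Strategic Fit 5, Feasibility 3, Risk 3.

$$
\text{Score}_{\text{summaries}} = \frac{1 \times 2 \times 5}{2} = 5
\qquad
\text{Score}_{\text{churn}} = \frac{5 \times 5 \times 3}{3} = 25
$$

Even with a lower feasibility rating and a higher risk rating, churn prediction comes out well ahead because its impact and strategic fit multiply through the score.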

Key Takeaways

- Not every AI experiment is worth running.

- Evaluate projects based on outcomes, feasibility, risk, and compounding value.

- Prioritize a few high-leverage projects over many low-impact demos.

- Remember: building capabilities beats building features — the use cases you choose today shape the AI advantage you have tomorrow.

AI isn’t just a feature you sprinkle into your product. The right projects, selected thoughtfully, turn AI into a strategic advantage that compounds over time.