How to Build a Product Org That Can Actually Ship AI

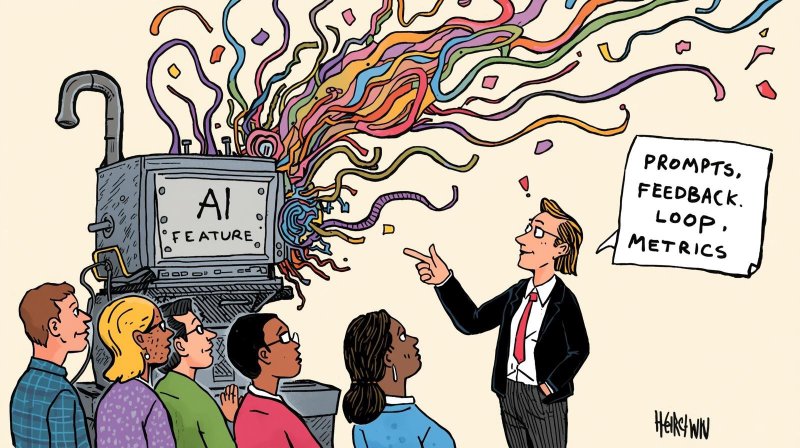

Every company wants AI. Most teams end up running a feature factory. It’s easy to experiment: spin up a prompt, test a model, watch it generate something interesting. The thrill of the new is intoxicating. But few teams know how to turn those experiments into reliable, shippable AI products. The problem isn’t enthusiasm or ambition — it’s that most orgs lack the cross-functional muscle to ship AI responsibly and repeatedly.

This is part of the same pattern I explored in The AI Strategy Gap: adding AI features isn’t the same as building AI capabilities. The feature factory isn’t hype or laziness. It’s a symptom of that missing cross-functional muscle. It often starts with the assumption that “AI-first” means “chat-first”, which leads teams to default to chatbot interfaces instead of thinking deeply about how intelligence should be embedded into workflows.

Why shipping AI is different

Traditional product orgs are built for deterministic features. A new workflow, a button, or an integration can be coded, tested, and shipped with predictable results. AI doesn’t work that way. Its outputs are probabilistic, context-sensitive, and constantly evolving. Deploying a frontier AI model — whether GPT-5, Claude, or another state-of-the-art system — is just the beginning.

Teams suddenly have to answer questions they never considered before. Who owns the quality of the output? Who ensures the prompts or input data are accurate? Who mitigates hallucinations or unsafe suggestions? Without a framework for tackling these challenges, AI features become flashy one-offs: impressive in demos, disappointing in production. Enthusiasm is there; repeatable capability is not.

Building the muscle

Shipping AI doesn’t require building custom models. What matters is alignment, collaboration, and shared ownership across Product × AI Model × Design.

The AI product manager owns not just the feature, but the performance envelope: evaluation metrics, error budgets, and integration into the product experience. Engineers and prompt specialists ensure that models are integrated safely, outputs are monitored, and pipelines remain stable. Designers craft the human–AI interaction, shaping how users engage with AI features and building feedback loops to catch issues early.
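To make the idea of an error budget concrete, here is a minimal sketch in Python. It assumes the team already labels each evaluated output as pass or fail; the names `ErrorBudget` and `budget_report` and the 5% threshold are hypothetical illustrations, not an established standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorBudget:
    """Hypothetical error budget: the share of evaluated outputs allowed to fail."""

    max_failure_rate: float  # assumed example value: 0.05 tolerates 5% failures

    def __post_init__(self) -> None:
        if not 0 < self.max_failure_rate <= 1:
            raise ValueError("max_failure_rate must be in (0, 1]")


def budget_report(budget: ErrorBudget, evaluated: int, failed: int) -> dict:
    """Summarize how much of the budget a review window has used."""
    if evaluated <= 0 or not 0 <= failed <= evaluated:
        raise ValueError("need evaluated > 0 and 0 <= failed <= evaluated")
    observed = failed / evaluated
    return {
        "observed_failure_rate": observed,
        # 1.0 means the budget is fully spent; above 1.0 means it is exceeded.
        "budget_consumed": observed / budget.max_failure_rate,
        "within_budget": observed <= budget.max_failure_rate,
    }


if __name__ == "__main__":
    # Illustrative numbers only: 6 failing outputs out of 200 evaluated.
    print(budget_report(ErrorBudget(max_failure_rate=0.05), evaluated=200, failed=6))
```

A report like this gives the product manager a single number to bring to a review: once the budget is spent, the team pauses new changes to the feature and works on quality instead.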

Success comes from structured rituals, clear responsibilities, and shared tooling. OKRs tie AI experiments to outcomes — but only if you’re choosing the right experiments to begin with. A practical framework like the one in How to Evaluate AI Use Cases That Actually Move the Needle helps ensure your team invests in projects that deliver measurable outcomes, not just flashy demos. Dashboards make model behavior transparent. Regular reviews ensure decisions are visible and accountable. The key is coordination and process, not proximity or physical setup.

Discipline over magic

Even when you use frontier models rather than custom ones, shipping AI reliably requires operational rigor. Outputs need continuous evaluation and human-in-the-loop quality checks, and prompts need to be versioned so that changes don’t break production behavior. Lightweight AI enablement teams can help multiple squads maintain consistency and reduce friction.
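One way to picture versioned prompts with a regression check is the sketch below. It is an illustration under stated assumptions: `PromptVersion`, `EvalCase`, `pass_rate`, and `safe_to_promote` are hypothetical names, and `generate` stands in for whatever function calls your model, so no real provider API is implied.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class PromptVersion:
    """A prompt template whose version id is derived from its content."""

    name: str
    template: str

    @property
    def version(self) -> str:
        # Any edit to the template yields a different id, so changes are traceable.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:12]


@dataclass
class EvalCase:
    """One regression case: template inputs plus a check on the model output."""

    inputs: dict
    check: Callable[[str], bool]


def pass_rate(prompt: PromptVersion, cases: Sequence[EvalCase],
              generate: Callable[[str], str]) -> float:
    """Share of cases whose output passes its check for the given prompt."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    passed = sum(
        1 for case in cases
        if case.check(generate(prompt.template.format(**case.inputs)))
    )
    return passed / len(cases)


def safe_to_promote(candidate: PromptVersion, baseline: PromptVersion,
                    cases: Sequence[EvalCase], generate: Callable[[str], str],
                    tolerance: float = 0.0) -> bool:
    """Allow the candidate only if it does not regress against the baseline."""
    return (pass_rate(candidate, cases, generate)
            >= pass_rate(baseline, cases, generate) - tolerance)
```

In practice the evaluation cases would come from real user scenarios and from failures caught by human reviewers, so that each production incident becomes a permanent regression test.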

The discipline of shipping AI isn’t glamorous. It’s not about impressing stakeholders with flashy demos. It’s about building processes that make outputs reliable, safe, and repeatable. Without this discipline, the team will remain trapped in the cycle of the feature factory.

Governance without gatekeeping

Safety and compliance are often seen as blockers, but the best AI orgs integrate governance into their everyday workflow. Automated compliance checks, lightweight review boards, and embedded guardrails allow teams to move fast without leaving safety behind. The principle is simple: guardrails, not gates. Safety should be built into the way the team works, not added as an afterthought.
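As a rough illustration of “guardrails, not gates,” the sketch below runs automated checks on every output and either repairs it or flags it for a lightweight review, instead of halting the release process. Everything here is an assumption made for the example: the check functions, the flag names, the blocked phrase, and the deliberately simple email pattern.

```python
import re
from dataclasses import dataclass, field

# Deliberately simple pattern for illustration; real PII detection needs more care.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# Hypothetical policy list; a real one would come from the compliance team.
BLOCKED_PHRASES = ("guaranteed returns",)


@dataclass
class GuardrailResult:
    text: str
    flags: list = field(default_factory=list)

    @property
    def needs_review(self) -> bool:
        # Only policy flags are routed to a human; redactions are fixed in place.
        return any(flag.startswith("policy:") for flag in self.flags)


def redact_emails(result: GuardrailResult) -> GuardrailResult:
    redacted, count = EMAIL_PATTERN.subn("[redacted email]", result.text)
    if count:
        result.text = redacted
        result.flags.append("pii:email")
    return result


def flag_blocked_phrases(result: GuardrailResult) -> GuardrailResult:
    lowered = result.text.lower()
    for phrase in BLOCKED_PHRASES:
        if phrase in lowered:
            result.flags.append(f"policy:{phrase}")
    return result


GUARDRAILS = (redact_emails, flag_blocked_phrases)


def apply_guardrails(text: str) -> GuardrailResult:
    """Run every guardrail in order and collect what each one found."""
    result = GuardrailResult(text=text)
    for guardrail in GUARDRAILS:
        result = guardrail(result)
    return result
```

The design choice is that most outputs pass through untouched, minor issues are corrected automatically, and only the flagged minority reaches a reviewer, which keeps the review board lightweight.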

Culture: curiosity meets control

Finally, shipping AI at scale is a cultural challenge. High-performing teams prototype aggressively but ship responsibly. They cultivate shared literacy in AI behavior, evaluation metrics, and data ethics. “AI champions” spread knowledge across the org, preventing silos and building confidence. Success is measured not by the flashiest feature, but by the ability to deliver repeatable, reliable AI outcomes.

From feature factory to AI machine

AI won’t replace your product org. But the orgs that learn to ship AI reliably and responsibly, even when they rely on off-the-shelf frontier models rather than custom ones, will replace those that don’t.

If you’re staring at a whiteboard full of AI experiments, ask yourself: are these prototypes building muscle, or just generating noise? The difference is your ability to turn AI into repeatable, high-value features. That is the true competitive advantage.

Building AI capabilities — the shared foundations, reusable components, and cross-functional fluency I described in The AI Strategy Gap — requires exactly this kind of organizational discipline. Without it, you’ll keep shipping features while your competitors build compounding advantages.